Introduction

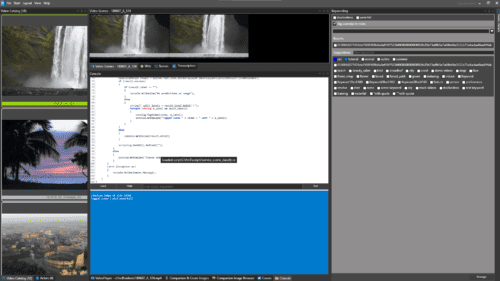

I will show you how to extend Fast Video Cataloger using its C# interface and a REST web service to classify scenes with machine learning.

Fast Video Cataloger (FVC) is local video asset management software that you can extend through a C#-based API. This example shows how to use the CodeProject.AI Server to classify video images in an FVC catalog.

Requirements

This example requires the latest version of Fast Video Cataloger ( https://videocataloger.com ) and the free CodeProject.AI Server ( https://www.codeproject.com/AI/ ). After installing the server, you must also install the Scene Classification module. The service URL should be http://localhost:32168 (the default as of March 2024). If the server is installed correctly, entering that URL in a web browser takes you to the server's status page. Wait for the default modules to download and install, then click the installed modules tab, find Scene Classification, and click Install. Downloading and installing the module can take a while; if you run the FVC script before the installation is complete, the script will time out and fail.
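Before running the FVC script, you can also check from C# that something answers at the server URL. The following is a minimal sketch that is not part of the original script: the names ServerCheck and IsServerUpAsync are hypothetical, and a positive result only tells you that the server's web page responds, not that the Scene Classification module has finished installing.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper: checks whether the AI server's root URL responds.
public static class ServerCheck
{
    // Returns true if a GET request to the server root answers with a 2xx status code,
    // and false if the server cannot be reached, times out, or answers with an error status.
    public static async Task<bool> IsServerUpAsync(string rootUrl, TimeSpan timeout)
    {
        using (HttpClient client = new HttpClient { Timeout = timeout })
        {
            try
            {
                using (HttpResponseMessage response = await client.GetAsync(rootUrl))
                {
                    return response.IsSuccessStatusCode;
                }
            }
            catch (HttpRequestException)
            {
                // Connection refused, unknown host, and similar network failures.
                return false;
            }
            catch (TaskCanceledException)
            {
                // HttpClient reports a timeout as a TaskCanceledException.
                return false;
            }
        }
    }
}
```

For example, `await ServerCheck.IsServerUpAsync("http://localhost:32168", TimeSpan.FromSeconds(5))` returns false when nothing is listening on the default port.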

The FVC script

Download the complete script from here. In Fast Video Cataloger, open the Script tab, click the Load button, find the downloaded script, and load it into the software. Select a video and click Run. The script uploads the video's poster image, classifies it using the CodeProject.AI Server, and then writes the classification result to the video as keyword tags. Any errors are shown in the script console in Fast Video Cataloger or on the Server Logs tab of the AI server.

Using machine learning to classify a scene in FVC

Possible extensions to the script

This script only uses the poster image. You could also iterate over the video's thumbnails and classify each image.

The CodeProject.AI Server also provides several YOLO (You Only Look Once) models that can detect objects in images.
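As a starting point for such an extension, here is a hedged sketch of turning an object-detection response into keyword tags. It assumes that the detection endpoint (presumably /v1/vision/detection, posted the same way as the scene request) returns a `predictions` array whose items carry `label` and `confidence` fields; check the CodeProject.AI API reference before relying on that shape. DetectionResult, DetectedObject, DetectionTags, and the minConfidence threshold are hypothetical names introduced here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Assumed shape of one detected object; verify against the API reference.
public class DetectedObject
{
    public string label { get; set; }
    public float confidence { get; set; }
}

// Assumed shape of the detection response; unknown JSON fields are ignored.
public class DetectionResult
{
    public bool success { get; set; }
    public List<DetectedObject> predictions { get; set; }
    public string error { get; set; }
}

public static class DetectionTags
{
    // Returns the distinct labels of all objects detected with at least minConfidence,
    // or an empty list if the request failed or nothing was detected.
    public static List<string> LabelsFromJson(string json, float minConfidence)
    {
        DetectionResult result = JsonSerializer.Deserialize<DetectionResult>(json);
        if (result == null || !result.success || result.predictions == null)
            return new List<string>();

        return result.predictions
            .Where(p => p.confidence >= minConfidence && !string.IsNullOrEmpty(p.label))
            .Select(p => p.label)
            .Distinct()
            .ToList();
    }
}
```

Each returned label could then be passed to catalog.TagVideo, as the scene script does with its classification label.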

Remember that you can assign buttons and shortcuts to scripts.

How the script works

Here is a breakdown of how the script works:

```csharp
//css_ref System.Memory;
//css_ref System.Text.Json;
using System.Collections.Generic;
using VideoCataloger;
using System.Runtime;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Linq;
using System;
using System.Threading.Tasks;
using System.Text.Json;
```

The first section declares the namespaces the script uses. The lines starting with //css_ref are not ordinary comments: css_ref in a C# comment is a directive to the FVC script engine, which then loads assemblies that are not referenced by default.

```csharp
public class ClassifyResult
{
    public bool success { get; set; }             // True if successful.
    public string label { get; set; }             // The classification of the scene, such as 'conference_room'.
    public float confidence { get; set; }         // Confidence in the classification, from 0.0 to 1.0.
    public int inferenceMs { get; set; }          // Time (ms) to perform the AI inference.
    public int processMs { get; set; }            // Time (ms) to process the image, including inference.
    public string moduleId { get; set; }          // Id of the module that processed the request.
    public string moduleName { get; set; }        // Name of the module that processed the request.
    public string command { get; set; }           // Command sent as part of the request.
    public string executionProvider { get; set; } // Device or package handling inference, e.g. CPU, GPU.
    public bool canUseGPU { get; set; }           // True if the module can use the current GPU.
    public int analysisRoundTripMs { get; set; }  // Time (ms) for the round trip to the analysis module.
    public string error { get; set; }             // Error description; printed by the script when success is false.
}
```

This part declares a class that matches the JSON format returned by the REST API we are using. The JSON looks like this:

```text
{
  "success": (Boolean) // True if successful.
  "label": (Text) // The classification of the scene such as 'conference_room'.
  "confidence": (Float) // The confidence in the classification in the range of 0.0 to 1.0.
  "inferenceMs": (Integer) // The time (ms) to perform the AI inference.
  "processMs": (Integer) // The time (ms) to process the image (includes inference and image manipulation operations).
  "moduleId": (String) // The Id of the module that processed this request.
  "moduleName": (String) // The name of the module that processed this request.
  "command": (String) // The command that was sent as part of this request. Can be detect, list, status.
  "executionProvider": (String) // The name of the device or package handling the inference. eg CPU, GPU, TPU, DirectML.
  "canUseGPU": (Boolean) // True if this module can use the current GPU if one is present.
  "analysisRoundTripMs": (Integer) // The time (ms) for the round trip to the analysis module and back.
}
```

This format is described in the API documentation: API Reference – CodeProject.AI Server v2.5.3
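To see how the class and the JSON fit together, the sketch below deserializes a response with System.Text.Json. The sample values are made up for illustration, ClassifyParsing is a hypothetical helper name, and the class is a trimmed copy of ClassifyResult so that the example compiles on its own.

```csharp
using System;
using System.Text.Json;

// Trimmed copy of the script's ClassifyResult; property names match the JSON keys exactly.
public class ClassifyResult
{
    public bool success { get; set; }
    public string label { get; set; }
    public float confidence { get; set; }
    public int inferenceMs { get; set; }
    public int processMs { get; set; }
    public bool canUseGPU { get; set; }
    public int analysisRoundTripMs { get; set; }
    public string error { get; set; }
}

public static class ClassifyParsing
{
    // Hypothetical sample response; the values are invented for illustration.
    public const string SampleJson =
        "{\"success\":true,\"label\":\"conference_room\",\"confidence\":0.87," +
        "\"inferenceMs\":41,\"processMs\":45,\"executionProvider\":\"CPU\"," +
        "\"canUseGPU\":false,\"analysisRoundTripMs\":60}";

    // Keys without a matching property (here executionProvider) are ignored,
    // and properties missing from the JSON (here error) keep their default value.
    public static ClassifyResult Parse(string json)
    {
        return JsonSerializer.Deserialize<ClassifyResult>(json);
    }
}
```

System.Text.Json matches property names case-sensitively by default, which is why the C# properties keep the API's camelCase spelling. If you prefer PascalCase properties, you can pass `new JsonSerializerOptions { PropertyNameCaseInsensitive = true }` to Deserialize.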

```csharp
static async Task<string> PostRequest(string ServiceRootUrl, string ServiceEndPoint, MultipartFormDataContent form)
{
    // Create a client for the AI server and ask for a JSON response.
    using (HttpClient client = new HttpClient())
    {
        client.BaseAddress = new Uri(ServiceRootUrl);
        client.DefaultRequestHeaders.Accept.Clear();
        client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

        // Post the multipart form to the endpoint and return the raw JSON body.
        HttpResponseMessage response = await client.PostAsync(ServiceEndPoint, form);
        string jsonResponse = await response.Content.ReadAsStringAsync();
        return jsonResponse;
    }
}
```

The PostRequest function sends the form as a POST request to the API endpoint and returns the response body as a JSON string. The caller then parses that string into a ClassifyResult.
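PostRequest creates a new HttpClient on every call and uses HttpClient's default timeout of 100 seconds. That is fine for one image per run, but if you extend the script to classify many images (for example, every thumbnail), Microsoft's guidance is to reuse a single client. The sketch below is a hypothetical variant, not the original script's code: ScenePoster, CreateClient, and the timeout and handler parameters are names introduced here.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class ScenePoster
{
    // Creates one client for the AI server that can be reused for many requests.
    // The optional handler lets callers configure proxies, certificates, or test doubles.
    public static HttpClient CreateClient(string serviceRootUrl, TimeSpan timeout, HttpMessageHandler handler = null)
    {
        HttpClient client = handler == null ? new HttpClient() : new HttpClient(handler);
        client.BaseAddress = new Uri(serviceRootUrl);
        // Give up after this long instead of HttpClient's default of 100 seconds.
        client.Timeout = timeout;
        client.DefaultRequestHeaders.Accept.Clear();
        client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
        return client;
    }

    // Posts the form on an existing client and returns the raw JSON body,
    // like the script's PostRequest, but without creating a client per call.
    public static async Task<string> PostRequest(HttpClient client, string serviceEndPoint, MultipartFormDataContent form)
    {
        using (HttpResponseMessage response = await client.PostAsync(serviceEndPoint, form))
        {
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

You would create the client once, for example with `ScenePoster.CreateClient("http://localhost:32168", TimeSpan.FromSeconds(30))` (30 seconds is an arbitrary example), and then call `await ScenePoster.PostRequest(client, "/v1/vision/scene", form)` for each image.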

Finally, here is the main script function, Run, which is called when you click the Run button:

```csharp
static public async System.Threading.Tasks.Task Run(IScripting scripting, string arguments)
{
    var catalog = scripting.GetVideoCatalogService();
    var console = scripting.GetConsole();
    console.Clear();

    try
    {
        string ServiceRootUrl = "http://localhost:32168";

        List<long> selected = scripting.GetSelection().GetSelectedVideos();
        if (selected.Count == 1)
        {
            long video = selected[0];

            // Get the video's poster image as JPEG bytes.
            byte[] image_data = await catalog.GetVideoFileImageAsync(video);

            // Send the image as the "image" field of a multipart form.
            MultipartFormDataContent form = new MultipartFormDataContent();
            form.Add(new ByteArrayContent(image_data, 0, image_data.Length), "image", "videoimage.jpg");
            console.WriteLine("Posting image of size " + image_data.Length);

            string jsonResponse = await PostRequest(ServiceRootUrl, "/v1/vision/scene", form);
            ClassifyResult result = System.Text.Json.JsonSerializer.Deserialize<ClassifyResult>(jsonResponse);

            if (result != null && result.success)
            {
                if (string.IsNullOrEmpty(result.label))
                {
                    console.WriteLine("No predictions on image");
                }
                else
                {
                    // A label can contain several parts separated by '/'; tag the video with each part.
                    string[] split_labels = result.label.Split('/');
                    foreach (string a_label in split_labels)
                    {
                        catalog.TagVideo(video, a_label);
                        console.WriteLine("Tagged video " + video + " with " + a_label);
                    }
                }
            }
            else
            {
                console.WriteLine(result != null ? result.error : "Could not parse response: " + jsonResponse);
            }

            // Refresh the UI so the new keywords are shown.
            scripting.GetGUI().Refresh("");
        }
        else
        {
            console.WriteLine("Please select one video");
        }
    }
    catch (Exception ex)
    {
        console.WriteLine(ex.Message);
    }
}
```

Here we get the video ID of the selection and then get the JPEG image with GetVideoFileImageAsync(). Next, we use MultipartFormDataContent to create a form post request containing the image and call our utility function PostRequest, which posts the image to the server and returns the result. Finally, we deserialize the result, split the label string on '/', and tag the video with each part.
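The label handling at the end of Run can be pulled into a small pure function, which makes it easy to test without FVC or the AI server. This is a sketch under stated assumptions: LabelTags and TagsFromLabel are hypothetical names, and the minConfidence filter is an extra not present in the original script, which tags every successful result regardless of confidence.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LabelTags
{
    // Splits a label on '/' (as the script does), trims whitespace, and drops empty or
    // duplicate parts. Returns no tags if the label is empty or the confidence is below
    // minConfidence (a hypothetical threshold, not part of the original script).
    public static List<string> TagsFromLabel(string label, float confidence, float minConfidence)
    {
        if (string.IsNullOrWhiteSpace(label) || confidence < minConfidence)
            return new List<string>();

        return label.Split('/')
            .Select(part => part.Trim())
            .Where(part => part.Length > 0)
            .Distinct()
            .ToList();
    }
}
```

In Run, the tagging loop could then become `foreach (string tag in LabelTags.TagsFromLabel(result.label, result.confidence, 0.3f)) catalog.TagVideo(video, tag);`, where 0.3 is an arbitrary example threshold.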